Key Concepts

Before diving into integration, it’s important to understand the core ideas behind how Moveo One works — what it measures, how it processes data, and how this enables both UX analytics and predictive modeling.

How Moveo One Works

Moveo One captures user interactions across your digital product — every tap, scroll, or click — and organizes them into sessions.

Each session represents a continuous period of user activity within your app or website.

After a session ends, Moveo One processes the recorded data to compute cognitive and behavioral features, such as hesitation, rhythm, and navigation effort.

These features are then used to understand user experience quality and, optionally, to power predictive models.

Core Concepts

🧩 Context

A context represents a large, independent part of the application. Contexts divide the app into functional units that can exist independently. Examples: onboarding, main_app_flow, checkout_process.

🕒 Session

A session is a collection of related events grouped together in time.

Sessions usually start when the app is opened (or a user visits the site) and end after a period of inactivity.

Each session represents a full interaction story — from entry point to exit — and serves as the fundamental unit for analytics and model training.
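As a rough illustration of how inactivity-based session boundaries can work, the sketch below groups time-ordered events into sessions. The `RawEvent` type, the `split_into_sessions` helper, and the 30-minute timeout are assumptions made for this example; they are not part of the Moveo One SDK, and the actual timeout may differ.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical inactivity threshold; the value Moveo One actually uses is not stated here.
INACTIVITY_TIMEOUT_S = 30 * 60


@dataclass
class RawEvent:
    timestamp: float  # seconds since epoch
    name: str


def split_into_sessions(
    events: List[RawEvent], timeout_s: float = INACTIVITY_TIMEOUT_S
) -> List[List[RawEvent]]:
    """Group events by time; a gap longer than timeout_s starts a new session."""
    sessions: List[List[RawEvent]] = []
    for event in sorted(events, key=lambda e: e.timestamp):
        if sessions and event.timestamp - sessions[-1][-1].timestamp <= timeout_s:
            sessions[-1].append(event)  # still within the current session
        else:
            sessions.append([event])  # first event, or inactivity gap exceeded
    return sessions
```

With this rule, two bursts of activity separated by more than the timeout become two separate sessions, each with its own entry point and exit.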

🧠 Semantic Group

A semantic group organizes events by meaning rather than by code.

For example:

- `click_login_button` and `tap_login_button` → belong to the authentication group

- `scroll_feed` and `load_more_posts` → belong to the content consumption group

This grouping allows Moveo One to understand why an interaction occurred, not just what the user did.

💡 Tip (Mobile Apps): In mobile applications, semantic groups typically correspond to screens — such as Home, Profile, or Checkout.

💡 Tip (Websites): On websites, semantic groups are usually detected automatically and often represent sections of a page — such as Header, Product Details, or Footer.
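Conceptually, a semantic group is a lookup from an event to the meaning it carries. The sketch below uses the event and group names from the examples in this section; the `SEMANTIC_GROUPS` table and `semantic_group_for` helper are hypothetical and only illustrate the idea, since on websites this grouping is usually detected automatically.

```python
from typing import Dict

# Hypothetical mapping built from the examples in this section.
SEMANTIC_GROUPS: Dict[str, str] = {
    "click_login_button": "authentication",
    "tap_login_button": "authentication",
    "scroll_feed": "content_consumption",
    "load_more_posts": "content_consumption",
}


def semantic_group_for(event_name: str, default: str = "ungrouped") -> str:
    """Return the semantic group of an event, or a default if it is unmapped."""
    return SEMANTIC_GROUPS.get(event_name, default)
```

Two differently named events (a click on web, a tap on mobile) resolve to the same group, which is what lets analysis compare them by meaning rather than by code.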

Metadata

Moveo One distinguishes between standard metadata and additional metadata, both of which enrich how events and sessions are interpreted.

🧾 Standard Metadata

Standard metadata includes all environmental and contextual factors that can influence user experience, such as:

- `locale` (e.g., `en_US`, `fr_FR`)

- `screen_size` or device resolution

- feature flags or active experiment variant

- `ab_test_group` (from your A/B testing setup)

- `app_version`, `os`, or `browser`

These parameters help explain differences in UX performance across user segments — for instance, why one A/B group performs better than another, or why a specific OS version has higher friction.

🧩 Additional Metadata

Additional metadata is everything else you choose to attach to a session for analytical or segmentation purposes, such as:

- Demographics (e.g., age group, region)

- Device or network category (e.g., mobile vs. desktop, Wi-Fi vs. LTE)

- Manual enrichments (e.g., user plan, subscription type)

- Referral sources (campaigns, partner URLs, traffic origin)

Additional metadata helps you create custom dimensions in analytics — without affecting UX scoring or model calculations.

For example, you might compare “premium” vs. “free” users, or analyze which referral channels produce higher engagement.
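The sketch below shows one way to picture the two kinds of metadata on a session and to use additional metadata as a custom dimension. The dictionary layout, the field names under `"additional"`, and the `mean_duration_by` helper are assumptions for illustration, not the Moveo One data schema.

```python
from collections import defaultdict
from statistics import mean
from typing import Any, Dict, List

Session = Dict[str, Any]

# Hypothetical session records: "standard" holds environment factors,
# "additional" holds whatever you attach for segmentation.
SESSIONS: List[Session] = [
    {
        "standard": {"locale": "en_US", "ab_test_group": "A", "app_version": "2.4.0"},
        "additional": {"plan": "premium", "referral": "newsletter"},
        "duration_s": 320.0,
    },
    {
        "standard": {"locale": "fr_FR", "ab_test_group": "B", "app_version": "2.4.0"},
        "additional": {"plan": "premium", "referral": "partner"},
        "duration_s": 280.0,
    },
    {
        "standard": {"locale": "en_US", "ab_test_group": "A", "app_version": "2.3.1"},
        "additional": {"plan": "free", "referral": "newsletter"},
        "duration_s": 100.0,
    },
]


def mean_duration_by(sessions: List[Session], key: str) -> Dict[str, float]:
    """Average session duration per value of one additional-metadata key."""
    buckets: Dict[str, List[float]] = defaultdict(list)
    for session in sessions:
        buckets[session["additional"].get(key, "unknown")].append(session["duration_s"])
    return {value: mean(durations) for value, durations in buckets.items()}
```

Calling `mean_duration_by(SESSIONS, "plan")` compares premium and free users, while `mean_duration_by(SESSIONS, "referral")` compares traffic sources over the same sessions.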

Events

Each user action is captured as an event.

An event represents an observable interaction, such as a click, scroll, page load, or form submission, performed on a specific element.

Event Types

Every event has a type: the general category of the element involved (e.g., button, checkbox, stepper, image, custom).

The full list of supported event types is below.

button

text

textEdit

image

images

image_scroll_horizontal

image_scroll_vertical

picker

slider

switchControl

progressBar

checkbox

radioButton

table

collection

segmentedControl

stepper

datePicker

timePicker

searchBar

webView

scrollView

activityIndicator

video

videoPlayer

audioPlayer

map

tabBar

tabBarPage

tabBarPageTitle

tabBarPageSubtitle

toolbar

alert

alertTitle

alertSubtitle

modal

toast

badge

dropdown

card

chip

grid

custom

Event Actions

Event actions describe what the user actually did — e.g., click, rotate, dragEnd, custom.

The full list of supported event actions is below.

click

view

appear

disappear

swipe

scroll

drag

drop

tap

doubleTap

longPress

pinch

zoom

rotate

submit

select

deselect

hover

focus

blur

input

valueChange

dragStart

dragEnd

load

unload

refresh

play

pause

stop

seek

error

success

cancel

retry

share

open

close

expand

collapse

edit

custom

Event Content

Each event in Moveo One carries content context — information about what element was interacted with and, when relevant, what content it contained.

This allows Moveo One to interpret the semantic meaning of interactions rather than just recording raw clicks or taps.

For example:

- For labels or buttons, the content is the displayed text (e.g., "Buy Now", "Continue").

- For text fields, it’s the text entered by the user (e.g., "search query" or "feedback").

- For media or list items, it might represent the title, ID, or source of the interacted element.

By associating actions with their visible or logical content, Moveo One can understand where the interaction occurred and what intent it represented — such as “clicked checkout button” or “edited profile name field.”
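To make the pieces concrete, the sketch below models an event as a small record combining a semantic group, a type, an action, and optional content. The `TrackedEvent` class and its field names are hypothetical; only the type and action values come from the lists in this document, and only a subset is included for brevity.

```python
from dataclasses import dataclass
from typing import Optional

# Subsets of the supported types and actions listed in this document.
KNOWN_TYPES = {"button", "text", "textEdit", "image", "checkbox", "custom"}
KNOWN_ACTIONS = {"click", "tap", "appear", "scroll", "input", "edit", "custom"}


@dataclass(frozen=True)
class TrackedEvent:
    """Hypothetical event record; not the SDK's actual event class."""

    semantic_group: str
    event_type: str
    action: str
    content: Optional[str] = None

    def __post_init__(self) -> None:
        # Reject values outside the known vocabulary early.
        if self.event_type not in KNOWN_TYPES:
            raise ValueError(f"unknown event type: {self.event_type}")
        if self.action not in KNOWN_ACTIONS:
            raise ValueError(f"unknown event action: {self.action}")

    def describe(self) -> str:
        """Human-readable summary such as: click button "Buy Now" in checkout."""
        label = f' "{self.content}"' if self.content else ""
        return f"{self.action} {self.event_type}{label} in {self.semantic_group}"
```

For example, `TrackedEvent("checkout", "button", "click", "Buy Now").describe()` yields a phrase close to the "clicked checkout button" intent described above.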

⚠️ Privacy Notice:

Moveo One automatically obfuscates content data at rest — meaning no raw text or user-entered information is stored in its original form.

However, it is strongly recommended that you avoid tracking any highly sensitive or personally identifiable data, such as:

- Personal information (names, emails, phone numbers)

- Medical or health-related details

- Passwords or authentication tokens

The goal is to capture interaction meaning, not sensitive user content.
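One way to follow this recommendation is to filter content on your side before it is attached to an event. The sketch below is a minimal example under stated assumptions: the `safe_content` helper, the list of field-name hints, and the email pattern are all illustrative, are not provided by Moveo One, and will not catch every form of personal data.

```python
import re

# Simple email pattern; intentionally conservative and not exhaustive.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

# Hypothetical hints: element identifiers containing these words are never tracked.
SENSITIVE_FIELD_HINTS = ("password", "token", "email", "phone")


def safe_content(element_id: str, content: str) -> str:
    """Return content that is safer to attach to an event.

    Content from sensitive-looking fields is dropped entirely; elsewhere,
    anything that looks like an email address is masked.
    """
    if any(hint in element_id.lower() for hint in SENSITIVE_FIELD_HINTS):
        return "[redacted]"
    return EMAIL_RE.sub("[email]", content)
```

Dropping the whole value for sensitive fields, rather than trying to mask parts of it, keeps the interaction (a user typed into the password field) without keeping what was typed.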

Why We Track Appear, Click, and Scroll

These interaction types form the behavioral foundation for understanding user experience (UX):

| Event | Insight Example |
|---|---|
| appear | Measures which content is visible and for how long |
| click / tap | Captures intent and navigation patterns |
| scroll | Reveals attention depth, frustration loops, or hesitation |
| input | Identifies points of friction in forms or text fields |

Together, these allow Moveo One to detect where users struggle, where attention drops, and where flows break down.
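As an example of the kind of signal these events make available, the sketch below derives how long each element was visible by pairing appear and disappear events. The tuple layout and the `visible_seconds` helper are assumptions for illustration and do not describe how Moveo One computes visibility internally.

```python
from typing import Dict, List, Tuple

# (timestamp in seconds, action, element identifier), ordered by time.
VisibilityEvent = Tuple[float, str, str]


def visible_seconds(events: List[VisibilityEvent]) -> Dict[str, float]:
    """Sum the time each element spent on screen between appear and disappear."""
    appeared_at: Dict[str, float] = {}
    totals: Dict[str, float] = {}
    for timestamp, action, element in events:
        if action == "appear":
            appeared_at[element] = timestamp
        elif action == "disappear" and element in appeared_at:
            shown_for = timestamp - appeared_at.pop(element)
            totals[element] = totals.get(element, 0.0) + shown_for
    return totals
```

An element that appears several times accumulates its total visible time; an element that never disappears before the stream ends is left out of the result in this simplified version.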

What Happens After Tracking

Once a session ends (for example, when a user closes the app or becomes inactive), Moveo One:

- Processes the collected event stream

- Extracts behavioral and cognitive features

- Stores them in a structured schema

- Makes them available for analytics and model building

This enables both human-readable insights and machine learning–based predictions.
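The feature-extraction step can be pictured as turning a session's event timestamps into a few numbers. The sketch below computes simple timing statistics as stand-ins: the feature names and formulas are assumptions chosen for illustration and are not Moveo One's actual definitions of hesitation, rhythm, or navigation effort.

```python
from statistics import mean, pstdev
from typing import Dict, List


def extract_features(timestamps: List[float]) -> Dict[str, float]:
    """Compute illustrative timing features from time-ordered event timestamps."""
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    if not gaps:
        # Zero or one event: no gaps to measure.
        return {
            "event_count": float(len(timestamps)),
            "mean_gap_s": 0.0,
            "longest_pause_s": 0.0,
            "gap_stddev_s": 0.0,
        }
    return {
        "event_count": float(len(timestamps)),
        "mean_gap_s": mean(gaps),        # overall pace of interaction
        "longest_pause_s": max(gaps),    # rough proxy for a moment of hesitation
        "gap_stddev_s": pstdev(gaps),    # rough proxy for how regular the rhythm is
    }
```

The output is a flat, structured record per session, which is the general shape needed for both dashboards and model training.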

What This Enables

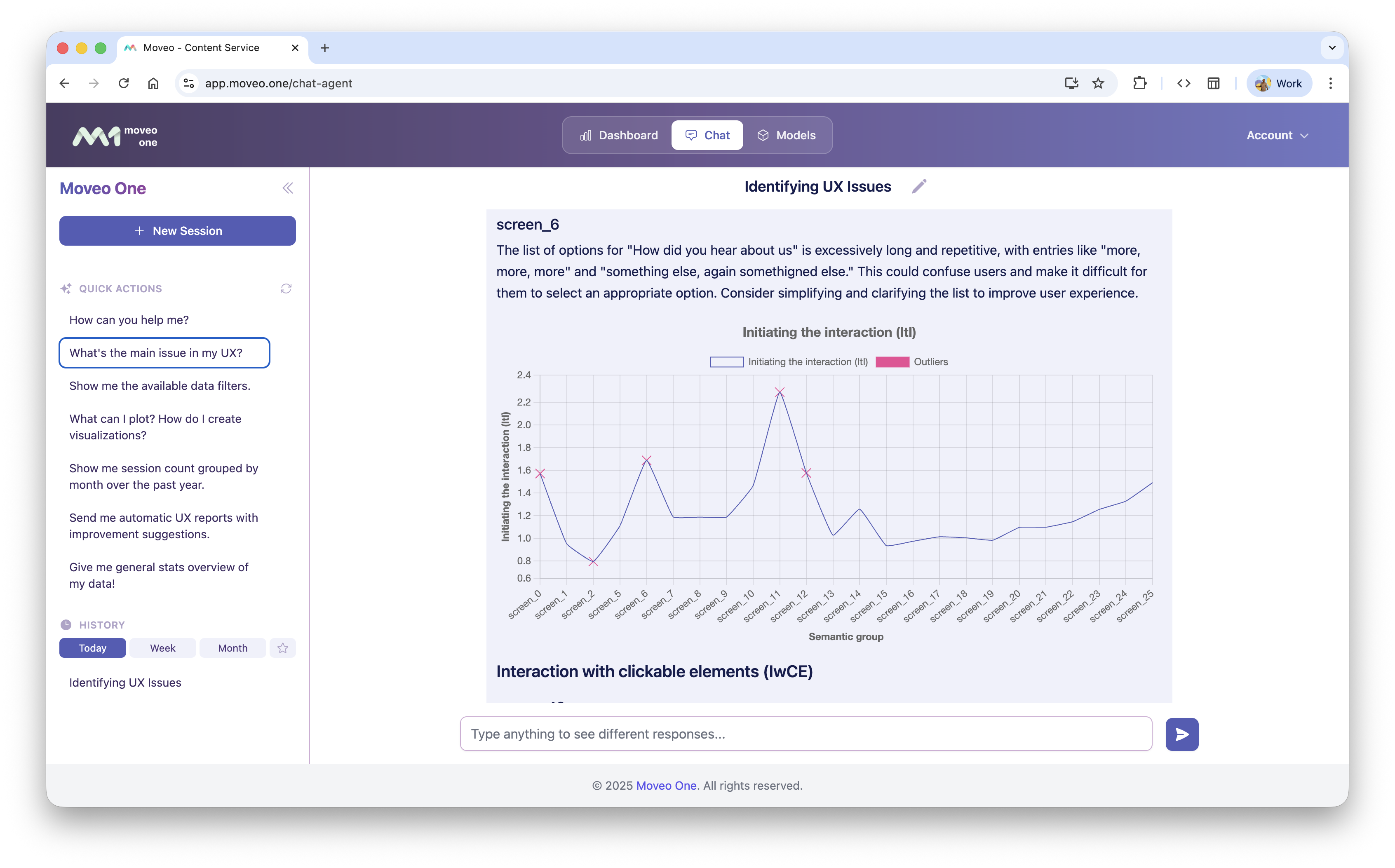

1. UX Analytics

Understand how users behave and why they struggle:

- Identify drop-offs, hesitation, or frustration patterns

- Detect confusing UI elements or long task durations

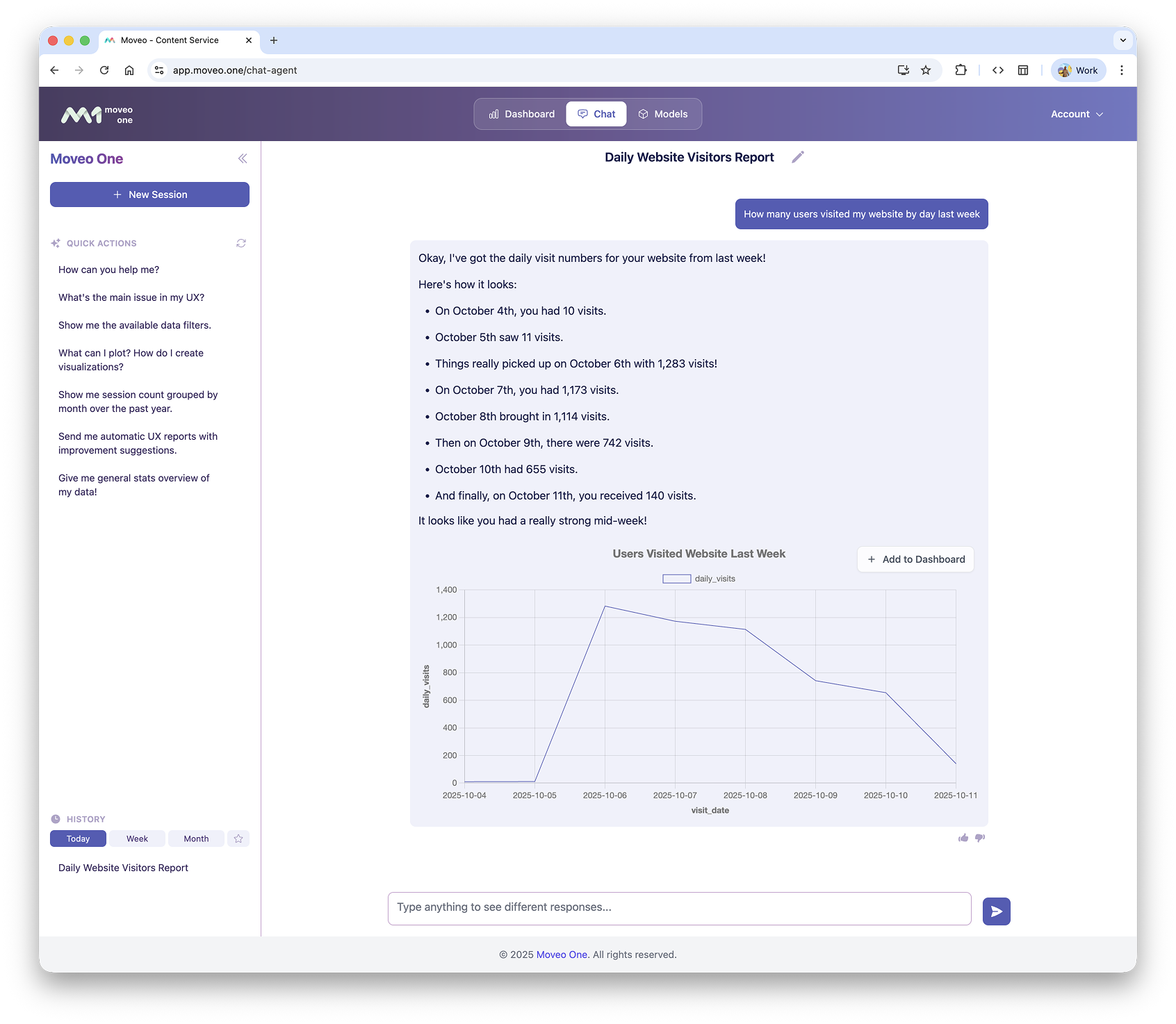

2. Standard Analytics (Out of the Box)

Moveo One automatically supports classic metrics such as:

- Number of visits

- Session duration

- Active users over time

- Top screens and features

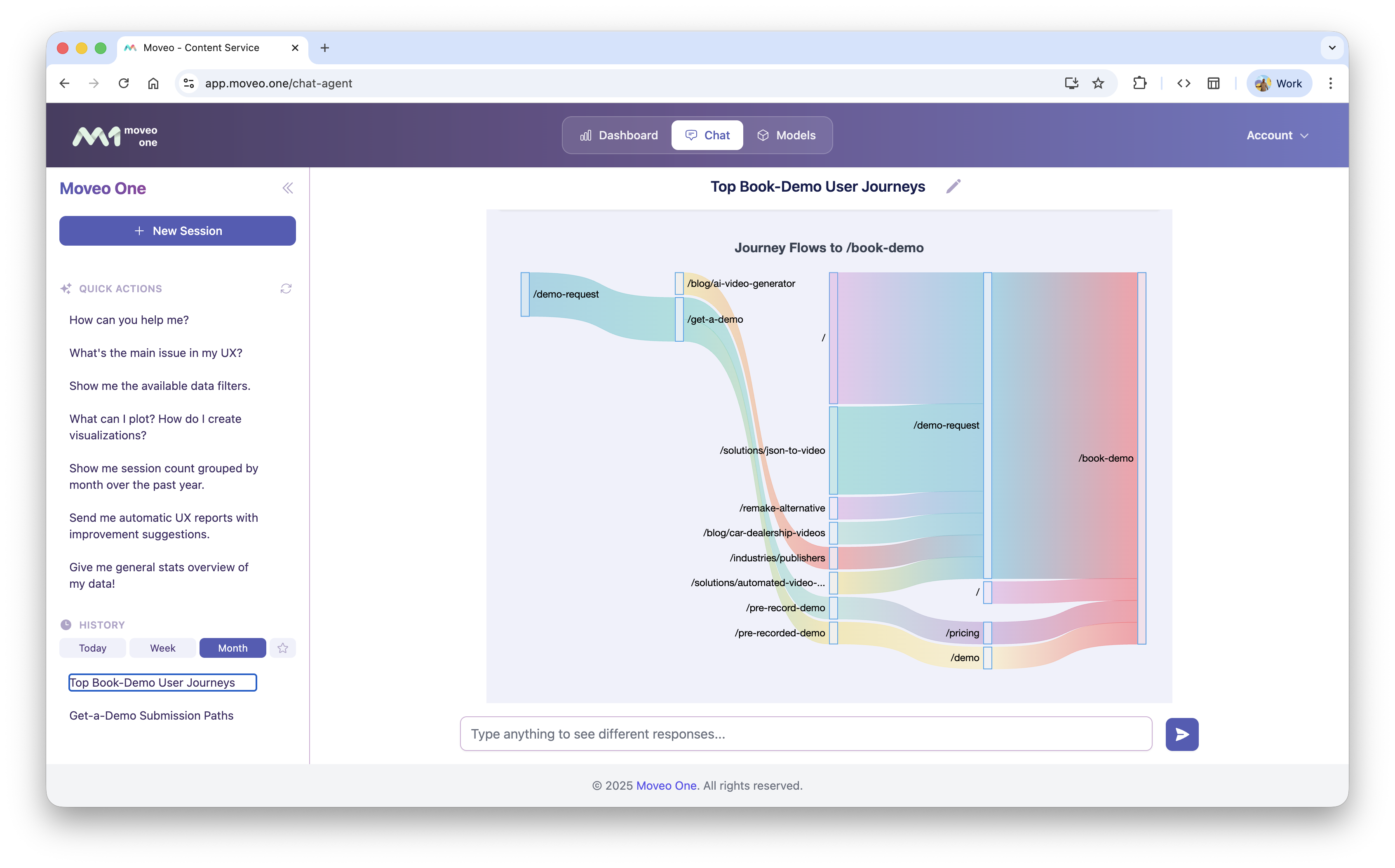

3. User Journey Identification

By mapping event sequences and semantic groups, Moveo One can identify user journeys and key behavioral patterns, like onboarding completion, cart abandonment, or content discovery.
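A simplified way to think about journey identification is pattern matching over the ordered event names in a session. In the sketch below, the `classify_journey` helper and the journey labels are hypothetical, and the real identification is likely richer than an ordered-subsequence check; the event names `add_to_cart` and `checkout_complete` are the ones used as examples in this document.

```python
from typing import Iterable, List


def is_subsequence(pattern: Iterable[str], events: Iterable[str]) -> bool:
    """True if all pattern steps occur in events in order (not necessarily adjacent)."""
    remaining = iter(events)
    # "step in remaining" advances the iterator, so order is enforced.
    return all(step in remaining for step in pattern)


def classify_journey(events: List[str]) -> str:
    """Assign a coarse journey label to one session's ordered event names."""
    if is_subsequence(["add_to_cart", "checkout_complete"], events):
        return "purchase"
    if "add_to_cart" in events:
        return "cart_abandonment"
    return "browsing"
```

A session with `add_to_cart` but no later `checkout_complete` is labeled as cart abandonment, which matches the pattern named above.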

Predictive Models

In addition to analytics, Moveo One enables you to build and deploy predictive models directly from behavioral data.

These models leverage the same cognitive and interaction features extracted from user sessions to predict what a user is likely to do next — such as completing onboarding, abandoning checkout, or continuing engagement.

⚡ Runtime Predictions:

Moveo One models operate in real time, continuously analyzing live event streams.

Predictions are generated at runtime, typically within 100 ms, so you can react immediately, for example by showing adaptive UI hints, triggering retention flows, or optimizing conversion steps while the user is still active.

Key Concepts in Model Building

🎯 Target Event

The target event is what you want to predict.

Examples:

- Completing onboarding (`onboarding_complete`)

- Performing a checkout (`checkout_complete`)

- Opening a specific page (`view_pricing_page`)

⚙️ Conditional Event (optional)

A conditional event defines a prerequisite condition that must be satisfied for the prediction to be meaningful.

Examples:

- Predict “checkout completion” only after the user added an item to the basket (`add_to_cart`)

- Predict “profile setup” only after the user opened the settings screen

- Predict “subscription renewal” only if the user visited the pricing page in the last session

This allows fine-grained, situation-aware predictions.
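The interplay of target and conditional events can be sketched as a labeling rule for training data: a session only counts once the condition has occurred, and the label says whether the target followed. The `label_session` helper below is a hypothetical illustration of that idea within a single session, not the way Moveo One builds its training sets.

```python
from typing import List, Optional


def label_session(
    events: List[str], target: str, condition: Optional[str] = None
) -> Optional[bool]:
    """Label one session's ordered event names for a prediction task.

    Returns True or False when the session is eligible, and None when a
    conditional event is required but never occurred.
    """
    if condition is None:
        return target in events
    if condition not in events:
        return None  # not eligible: the prerequisite never happened
    after_condition = events[events.index(condition) + 1:]
    return target in after_condition
```

For example, with target `checkout_complete` and condition `add_to_cart`, sessions where nothing was added to the basket are excluded instead of being counted as failed checkouts.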

🧪 A/B Testing

When you deploy a predictive model, Moveo One automatically splits live sessions into test and control groups.

- The test group receives model-driven optimizations or interventions.

- The control group behaves normally.

- The dashboard then shows the relative difference between the two, based on your target event.

In the future, more advanced A/B testing setups will be available — allowing multiple model versions and adaptive optimization strategies.
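The split and the comparison described in this section can be sketched as follows. Everything here is an assumption for illustration: the hash-based assignment, the 50/50 default share, and the function names are not taken from Moveo One, which performs the split automatically.

```python
import hashlib


def assign_group(session_id: str, test_share: float = 0.5) -> str:
    """Deterministically assign a session to "test" or "control".

    Hashing the identifier gives a stable, roughly uniform value in [0, 1),
    so the same session always lands in the same group.
    """
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "test" if bucket < test_share else "control"


def relative_difference(test_rate: float, control_rate: float) -> float:
    """Relative change of the target-event rate in test versus control."""
    if control_rate == 0:
        raise ValueError("control rate must be non-zero")
    return (test_rate - control_rate) / control_rate
```

If 12% of test sessions reach the target event against 10% of control sessions, `relative_difference(0.12, 0.10)` gives roughly 0.2, that is, a 20% relative improvement.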

Summary

Moveo One connects low-level user interactions (like clicks and scrolls) with high-level outcomes (like conversions or frustration).

By analyzing behavioral patterns and cognitive effort, it bridges the gap between data collection and understanding user experience — all while enabling predictive, real-time insights.